Abstract

Standard convolution extracts and represents features effectively but is computationally expensive, largely because each convolution kernel is generated with as many channels as the input feature map, which introduces unnecessary redundancy. In this paper, we focus on kernel generation and present an interpretable span strategy, named SpanConv, for the effective construction of kernel space. Specifically, we first learn two single-channel navigated kernels as bases, then extend the two kernels with learnable coefficients, and finally span the two sets of kernels by their linear combination to construct the so-called SpanKernel. SpanConv is realized by replacing the plain convolution kernel with the SpanKernel. To verify its effectiveness, we design a simple network built on SpanConv. Experiments demonstrate that the proposed network uses significantly fewer parameters than benchmark networks for remote sensing pansharpening, while achieving competitive performance and excellent generalization. Code is available at https://github.com/zhi-xuan-chen/IJCAI-2022_SpanConv.

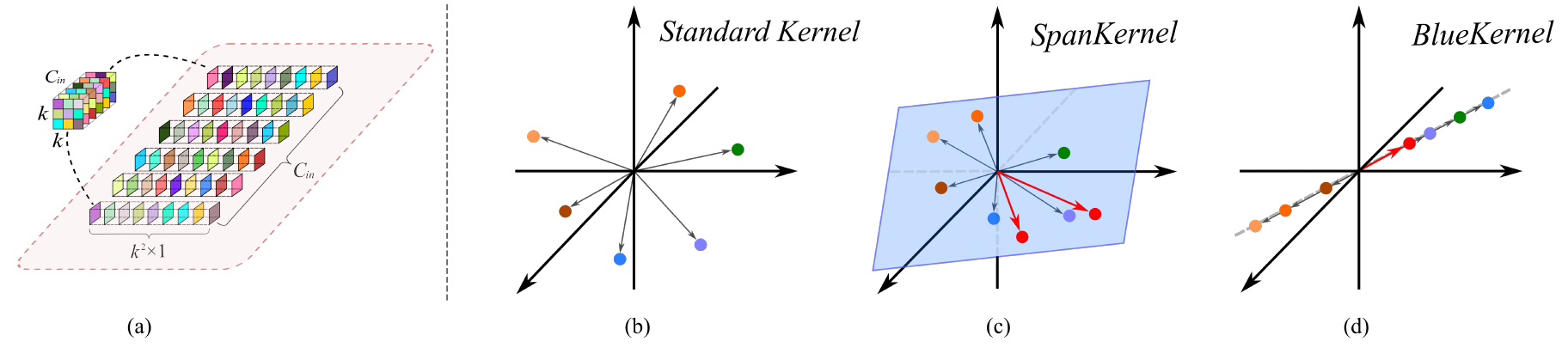

Schematic Diagram of the Proposed Module

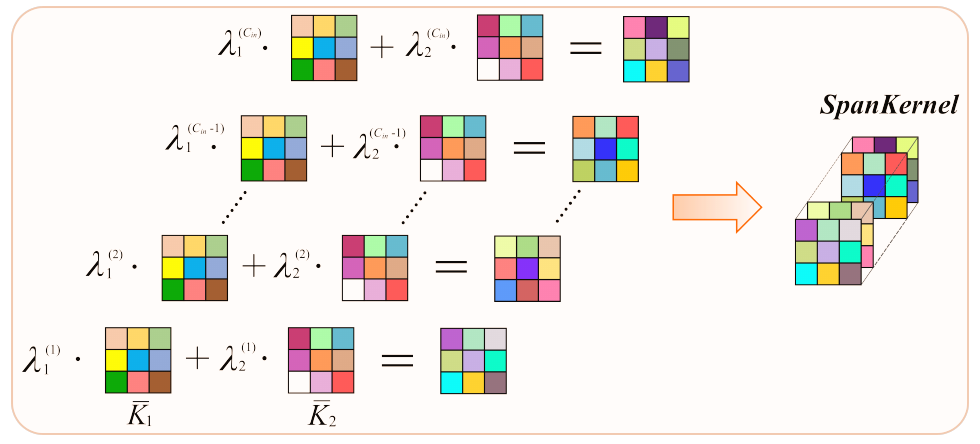

The proposed SpanConv is generated as follows:

We first generate two navigated kernels, then extend them to the input channels with learnable coefficients. The SpanKernel is then formed as the linear combination of the two extended navigated kernels. The accompanying figures also compare the standard convolution kernel, the proposed SpanKernel, and the prior BlueKernel proposed by Haase and Amthor (2020).
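To make the spanning step concrete, here is a minimal pure-Python sketch of how one multi-channel kernel could be built from two single-channel navigated kernels, together with a rough weight count. It is an illustration under stated assumptions, not the authors' implementation: the function names, the argument names `coef1` and `coef2`, and the assumption that every output channel owns its own pair of navigated kernels and coefficient vectors are all hypothetical. Channel `c` of the spanned kernel is taken to be `coef1[c] * nav1 + coef2[c] * nav2`.

```python
def span_kernel(nav1, nav2, coef1, coef2):
    """Span one multi-channel kernel from two single-channel navigated kernels.

    nav1, nav2   : k x k nested lists (the two navigated kernels, used as bases)
    coef1, coef2 : length-C_in lists of learnable coefficients (hypothetical names)
    Returns a C_in x k x k nested list with
    kernel[c] = coef1[c] * nav1 + coef2[c] * nav2.
    """
    return [
        [[a * u + b * v for u, v in zip(row1, row2)]
         for row1, row2 in zip(nav1, nav2)]
        for a, b in zip(coef1, coef2)
    ]


def standard_params(c_in, c_out, k):
    """Weights in a standard convolution layer (bias ignored)."""
    return c_out * c_in * k * k


def span_params(c_in, c_out, k):
    """Weights under the assumed layout: per output channel, two k x k
    navigated kernels plus two length-C_in coefficient vectors (bias ignored)."""
    return c_out * 2 * (k * k + c_in)


if __name__ == "__main__":
    nav1 = [[1, 0], [0, 1]]
    nav2 = [[0, 1], [1, 0]]
    # Two input channels, so two coefficients per navigated kernel.
    print(span_kernel(nav1, nav2, coef1=[2, 3], coef2=[5, 7]))
    # Compare weight counts for a 3 x 3 layer with 32 input and 32 output channels.
    print(standard_params(32, 32, 3), span_params(32, 32, 3))
```

Under these assumptions, the spanned layer stores `2 * (k * k + C_in)` weights per output channel instead of `C_in * k * k`, so the saving grows with both the kernel size and the number of input channels. The exact counts in the paper's network may differ from this sketch.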

For technical details, please refer to the original paper.

Downloads

Full paper: click here

Supplementary: click here

PyTorch code: click here

Reference

@inproceedings{chen2022spanconv,
  title={SpanConv: A new convolution via spanning kernel space for lightweight pansharpening},
  author={Chen, Zhi-Xuan and Jin, Cheng and Zhang, Tian-Jing and Wu, Xiao and Deng, Liang-Jian},
  booktitle={Proc. 31st Int. Joint Conf. Artif. Intell.},
  pages={1--7},
  year={2022}
}